Just posted: A near-final draft of my latest paper, Big Data: Destroyer of Informed Consent. It will appear later this year in a special joint issue of the Yale Journal of Health Policy, Law, and Ethics and the Yale Journal of Law and Technology.

Here’s the tentative abstract (I hate writing abstracts):

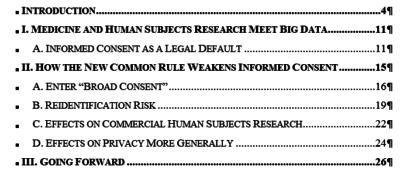

The ‘Revised Common Rule’ took effect on January 21, 2019, marking the first change since 2005 to the federal regulation that governs human subjects research conducted with federal support or in federally supported institutions. The Common Rule had required informed consent before researchers could collect and use identifiable personal health information. While informed consent is far from perfect, it is and was the gold standard for data collection and use policies; the standard in the old Common Rule served an important function as the exemplar for data collection in other contexts.

Unfortunately, true informed consent seems incompatible with modern analytics and ‘Big Data’. Modern analytics hold out the promise of finding unexpected correlations in data; it follows that neither the researcher nor the subject may know what the data collected will be used to discover. In such cases, traditional informed consent in which the researcher fully and carefully explains study goals to subjects is inherently impossible. In response, the Revised Common Rule introduces a new, and less onerous, form of “broad consent” in which human subjects agree to as varied forms of data use and re-use as researchers’ lawyers can squeeze into a consent form. Broad consent paves the way for using identifiable personal health information in modern analytics. But these gains for users of modern analytics come with side-effects, not least a substantial lowering of the aspirational ceiling for other types of information collection, such as in commercial genomic testing.

Continuing improvements in data science also cause a related problem: data that experimenters believe they have de-identified (and that is therefore subject to more relaxed rules about use and re-use) sometimes proves to be re-identifiable after all. The Revised Common Rule fails to take due account of real re-identification risks, especially when DNA is collected. In particular, the Revised Common Rule contemplates storage and re-use of so-called de-identified biospecimens even though these contain DNA that might be re-identifiable with current or foreseeable technology.

Defenders of these aspects of the Revised Common Rule argue that ‘data saves lives’. But even if that claim is as applicable as its proponents assert, the influence of the Revised Common Rule will not be limited to publicly funded health sciences, and its effects elsewhere will be harmful.

This is my second foray into the deep waters where AI meets Health Law. Plus it’s well under 50 pages! (First foray here; somewhat longer.)

However, I am now able to report that some news still shocks and surprises, such as this report from the ACLU,